The Arctic Region Supercomputing Center (ARSC), located on the Fairbanks campus of the University of Alaska, is converting its storage infrastructure from one based on Cray's Data Migration Facility to one based on Sun Microsystems' SAM-QFS product. This paper includes a brief description of ARSC and some of the work performed on its machines, the storage facilities ARSC has used in the past, why we are changing, the goals for the new systems, and where we are in this transition. Finally, some performance data is included.

The Arctic Region Supercomputing Center, located on the campus of the University of Alaska Fairbanks, supports computational research in science and engineering with emphasis on high latitudes and the Arctic. The center provides high performance computational, visualization, networking and data storage resources for researchers within the University of Alaska, other academic institutions, the Department of Defense and other government agencies. The Arctic Region Supercomputing Center (ARSC) is an Allocated Distributed Center in the Department of Defense's High Performance Computing Modernization Program.

Most of the HPC systems are shared resources of the DoD High Performance Computing Modernization Program and the University of Alaska. Areas of research include regional and global climate modeling; ocean circulation and tsunami modeling; proteomics and genomics; the physics of the upper atmosphere; permafrost, hydrology and arctic engineering; volcanology and geology; arctic biology and art.

There is now a wide variety of HPC machines at ARSC. There is a fully-populated Cray SV1ex with 32 vector processors, 32GB memory, 32GB SSD, and 2 TB disk. Also from Cray is a T3E900 with 272 processors, 26GB memory, and half a TB of disk. ARSC and Cray are jointly managing an onsite Cray SX-6 with 8 vector processors, 64GB memory and a TB of disk. We have an IBM SP with 200 processors, 100GB memory and 1.2 TB disk. Finally, we have an IBM P690 Regatta with 32 processors, 64 GB memory, and 1.4 TB of disk.

Coming over the course of this year is a Cray X1, with 128 processors, 512GB memory and 24TB disk, and a combination of IBM P690s and P655s that will add up to a 5TFLOP peak capability. These new systems will increase our peak capability by nearly 10 times.

Since its start, ARSC has based its storage on Cray's Data Migration Facility (DMF). For those not familiar with DMF, it is a hierarchical storage manager (HSM) that extends the disk filesystem onto tape or onto a filesystem on a network-connected computer. If so configured, DMF will manage filesystems such that adequate free space is always maintained. This is done by releasing the data blocks of files that have been copied onto the migration media. Access to those files can be automatic or explicit; the user does not need to be aware that DMF is running.
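The free-space behavior described above can be illustrated with a minimal sketch. This is not DMF code: the `FileEntry` record, the `release_until_free` function, and the least-recently-used ordering are all assumptions made for illustration. The only rule taken from the text is that a file's data blocks may be released once a copy exists on the migration media.

```python
from dataclasses import dataclass


@dataclass
class FileEntry:
    name: str
    size: int            # bytes of data currently held on disk
    last_access: float   # seconds since the epoch
    migrated: bool       # True once a copy exists on the migration media
    online: bool = True  # False after the disk blocks have been released


def release_until_free(files, free_bytes, target_free_bytes):
    """Release the blocks of migrated files until the free-space target is met.

    Candidates are visited least recently used first (an assumed policy).
    Files with no copy on the migration media are never released.
    Returns the list of released file names and the resulting free space.
    """
    released = []
    candidates = sorted(
        (f for f in files if f.migrated and f.online),
        key=lambda f: f.last_access,
    )
    for f in candidates:
        if free_bytes >= target_free_bytes:
            break
        f.online = False          # data now lives only on the migration media
        free_bytes += f.size
        released.append(f.name)
    return released, free_bytes
```

A released file keeps its directory entry, so a later access would trigger a recall from the migration media rather than an error.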

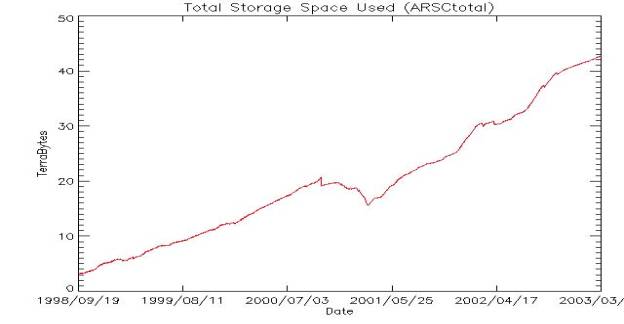

Since 1993, ARSC has had a Cray PVP machine running DMF, with DMF migrating files to tape. We've always kept 2 copies on separate media so as to guard against a tape failure. Tape media we've used over the years include 3480, Redwood, 9840 and 9940. Figure 1 shows total data growth at ARSC since 1998.

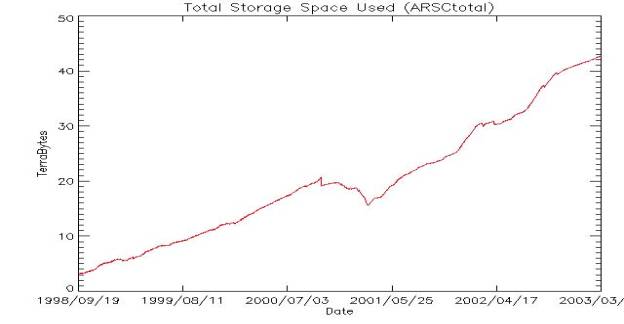

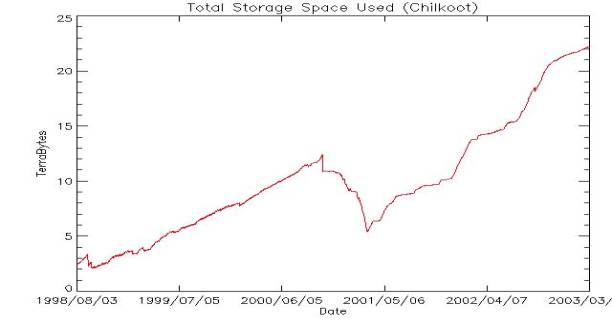

After acquiring a Cray T3E in 1997, we began DMF service on that machine. Initially, files were migrated using FTP over a dedicated HIPPI link to the Cray J90 (chilkoot). Eventually the volume of data on the T3E (yukon) became so great that we decided to attach tape drives directly. The big dip in the total growth (figure 1) and SV1 growth (figure 2) starting in September of 2000 was when we moved the data that the SV1 was storing on behalf of the T3E onto the T3E itself.

The growth history of chilkoot between 1998 and mid-2000 was dominated by the T3E data. Once the T3E began its own tape service, the growth on what was by then an SV1 became spikier, with the periods of faster growth correlating to activity by our largest vector user.

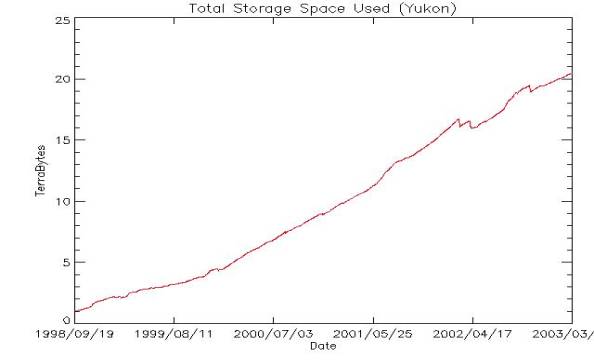

Growth on the T3E (figure 3) has been comparatively constant, dominated by our largest T3E user. The change in slope around the beginning of 2000 was when this user changed from an 18km grid to a 9km grid on his ocean/ice model.

In 1997, ARSC had a Y-MP running DMF. We had just acquired the T3E, which replaced the Cray T3D. We knew we would be maintaining a relationship with Cray for some time. Plans for DMF version 3.0 sounded quite promising, with 3rd party transfers and some amount of support for heterogeneous clients. Given this, we chose to stay with Cray DMF.

The plans for DMF never quite came to fruition. In fact, Cray has now stated they will not develop DMF beyond the version 2.5 currently running on Unicos and Unicos/mk. And those operating systems themselves are reaching end of life. In this regard, ARSC has no choice but to move storage to another platform.

Until the acquisition of the IBM SP, for the most part storage for ARSC users was on the machine on which they did their processing. Users loved it. But now ARSC has 5 major HPC systems of 3 different manufacturers and will soon have two more major systems. Given this, we believe it is time to separate the storage from the compute so that the newer compute technologies can come and go without impacting the storage infrastructure.

Storage technology is maturing such that standards for fibre channel and the like are becoming more consistent and performance is good. There are even a few examples of filesystems that can participate in a heterogeneous SAN, though this area of technology still needs improvement.

Finally, for years ARSC has exported two filesystems from the SV1 to the SGI workstations ARSC maintains on campus. This extended the benefits of DMF to those users. These SGI systems are accessible to all users of ARSC but are not considered DoD resources. No DoD-sponsored users have access to these filesystems, and users of these filesystems must agree not to store DoD-sensitive data on them. By contrast, users of the HPC resources identified as DoD resources must have passed a National Agency Check. The DoD, while it has not identified any vulnerabilities in this arrangement, has insisted that we end this practice, as it makes part of a system holding sensitive data accessible to users who have not passed a National Agency Check.

First, ARSC wanted reliable, maintainable systems to dedicate to storage. We have chosen 2 Sun Microsystems Sun Fire 6800 systems. Each of these systems has 8 900MHz UltraSPARCIII processors with 16GB memory and 10.5 TB of raw fibre channel RAID disk. Attached to each of these systems are 6 STK T9840 and 4 STK T9940B tape drives. Both disks and tapes are accessed via Sun-branded Qlogic fibre channel switches. The systems will run SAM-QFS as the HSM.

While not a true high-availability configuration, our 6800s are configured to be fault-tolerant by having dual paths to disk and networks, and enough tape drives, so that failure on any given path will not significantly degrade service. In addition, the Sun Fires have features that allow some degree of maintenance while the systems are in production. For example, under some circumstances, CPUs, memory and I/O cards can be quiesced and replaced while in production.

In addition, we acquired a Sun Fire 4800 to serve as a test platform.

In choosing these systems, we wanted high performance, but we didn't want to be on the 'bleeding edge' of technology. The Sun Fire systems have been on the market for a while now and are well regarded.

The aggregate bandwidth to disk on each 6800 is 800MB/s in redundant configuration. Aggregate native bandwidth to tape is 234MB/s.
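As a rough check on the tape figure, the aggregate can be rebuilt from the drive counts given earlier (6 T9840 and 4 T9940B per 6800). The per-drive native rates below, 19 MB/s and 30 MB/s, are assumptions and are not stated in this paper; they are uncompressed rates commonly quoted for these drive families, and they happen to reproduce the 234MB/s total.

```python
# Drive counts come from the paper; the per-drive native rates are ASSUMED.
DRIVES = {
    "T9840": {"count": 6, "native_mb_s": 19},
    "T9940B": {"count": 4, "native_mb_s": 30},
}


def aggregate_tape_bandwidth(drives):
    """Sum count * native rate over all drive types, in MB/s."""
    return sum(d["count"] * d["native_mb_s"] for d in drives.values())


print(aggregate_tape_bandwidth(DRIVES))  # 6*19 + 4*30 = 234
```

The figure is a ceiling with every drive streaming at once; the measured per-drive rates reported later in the paper are the better guide to what a single stream can expect.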

Similar systems are in use at other HPCMP sites, so we had some confidence in the solution. In addition, having a similar system meant we would be more compatible with them should the need arise to more actively support each other.

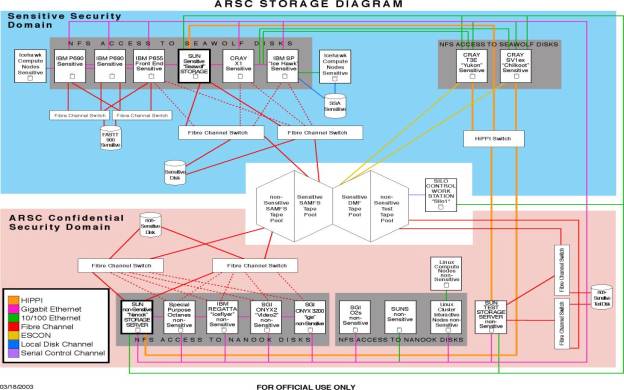

Two systems allow us to separate the DoD-sensitive data onto one server and the other data onto the other. We are logically dividing ARSC into two 'security domains', with the DoD-sensitive machines being served by one 6800 and the rest of the machines being served by the other.

We wanted systems that would scale in capacity and bandwidth. While the 6800s have been initially sized with the soon-to-arrive multi-teraflop systems in mind, they still have room to grow. They can accommodate more processors and faster processors. More memory can be added if needed. There is also still some room to add more I/O.

Obviously, we want the systems to be productive for our users. And this change will not be transparent to users. So we re-evaluated our user environment and decided to come up with a standard set of environment variables, which we'll encourage users to use.

$HOME is the user's default login directory. $HOME is platform-specific and in all cases except the SGIs, it is local to the machine. There is a small disk quota on $HOME, and it is intended that users will keep initialization files, source and binary code here, but not data.

All systems will have a $WRKDIR, which is a large, local, purged, temporary area where users should keep their data for the duration of their job. By default, users will have a 10GB quota here, although we raise the quota for users who demonstrate the need.

$ARCHIVE is for long-term storage of user data. These filesystems will be exported from the 6800s to the machines within that security domain. $ARCHIVE will be large, but will still be quota-controlled so that runaway jobs don't fill the filesystem.
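To make the intended division of labor concrete, here is a minimal sketch of an end-of-job step that copies results out of the purged work area into long-term storage. Only the `$WRKDIR` and `$ARCHIVE` variable names come from the text; the `archive_results` function, the per-run subdirectory layout, and the file patterns are hypothetical.

```python
import os
import shutil
from pathlib import Path


def archive_results(run_name, patterns=("*.out", "*.nc")):
    """Copy the result files of one run from $WRKDIR to $ARCHIVE.

    Assumes each run keeps its files in $WRKDIR/<run_name> (hypothetical
    layout). Returns the names of the files that were copied.
    """
    work = Path(os.environ["WRKDIR"]) / run_name
    dest = Path(os.environ["ARCHIVE"]) / run_name
    dest.mkdir(parents=True, exist_ok=True)

    copied = []
    for pattern in patterns:
        for src in sorted(work.glob(pattern)):
            shutil.copy2(src, dest / src.name)  # preserves timestamps
            copied.append(src.name)
    return copied
```

Because the script refers only to the environment variables, the same job script could run unchanged on any platform where both are defined, which is the point of standardizing the names.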

We may offer other special purpose filesystems on the Suns, like we sometimes do on the HPC systems. These filesystems usually are oriented for high-bandwidth or high-volume (or both) users. Giving these users a separate filesystem benefits both them and the rest of the users by reducing the competition for the disk heads.

Finally, we see new opportunities that weren't possible before. For example, serving data to the web from disk on the ARSC-confidential system is something we could never do with the data on the Crays.

Figure 4 is a graphic showing the separate security domains and the network and SAN connections within and between the domains. The dotted lines from the fibre channel disk to the Sun Fires represent the possibility of using heterogeneous SAN filesystems, but this is something we are not pursuing at present.

DMF has a comparatively limited set of features, and it is relatively simple to configure. SAM-QFS provides more features and more flexibility, at the price of a more complex setup. This can be overcome with time, but the initial learning curve is steep.

DMF has a small support team that has been working on it for years, and the product hasn't changed much. SAM-QFS was originally an LSCi product, and Sun bought LSCi. Some of the front line support people at Sun have limited experience with HSM in general and SAM-QFS in particular. The back line people at Sun are quite good.

This is a new skill set for ARSC. The new STK drives are unfamiliar to some within Sun too. We had one person at Sun tell us that the B drives should be translated loop devices, counter to the STK recommendation they should be full fabric. We later found written documents from Sun that confirmed that the STK B drives should in fact be connected to full fabric switch ports.

ARSC users liked having their storage on the compute platform, but with the growing number of different brands of computers, ARSC cannot maintain this model. So the change will not be transparent to users. ARSC must do active education as well as make scripts and build environments that make using the separate storage as painless as possible.

Most of the data on the Crays will be moving to the sensitive Sun. This data will be moved using the migration toolkit from Sun. Using this toolkit involves scanning the source filesystems on the Crays and saving the inode information required to create inodes in the SAM-QFS filesystem on the Suns. These inodes have a foreign media type specified. When one of these files is accessed, SAM realizes from the foreign media type that it must retrieve the file from the Cray. Once the file is retrieved and archived on tape media on the Sun, the media type is changed accordingly and all subsequent stages of that file come from local tape.
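The lifecycle just described (scan, create placeholder inodes, retrieve on first access, then serve from local tape) can be sketched as a small state model. This is not the Sun toolkit's interface: `MigrationModel`, `Inode`, the `FOREIGN` and `LOCAL_TAPE` labels, and the in-memory dictionaries standing in for the Cray and the local tape are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

FOREIGN = "foreign"     # placeholder media type: data still lives on the Cray
LOCAL_TAPE = "local"    # data has been archived to tape on the Sun


@dataclass
class Inode:
    path: str
    media_type: str = FOREIGN


class MigrationModel:
    """Toy model of on-demand migration driven by a foreign media type."""

    def __init__(self, cray_files):
        self.cray_files = dict(cray_files)   # path -> bytes on the source side
        self.local_tape = {}                 # path -> bytes archived locally
        # "Scan" step: create one placeholder inode per source file.
        self.inodes = {path: Inode(path) for path in self.cray_files}
        self.cray_fetches = 0

    def stage(self, path):
        """Return the file data, pulling it from the Cray on first access."""
        inode = self.inodes[path]
        if inode.media_type == FOREIGN:
            data = self.cray_files[path]     # retrieve from the Cray
            self.cray_fetches += 1
            self.local_tape[path] = data     # archive onto local tape
            inode.media_type = LOCAL_TAPE    # later stages stay local
            return data
        return self.local_tape[path]
```

The property the model makes visible is that each file crosses from the Cray at most once, so the load on the old systems falls as users touch their data.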

About 2 TB will be moving to the ARSC Confidential server. We plan to move these files in a more conventional manner because the number of users is low as is the data volume.

We're estimating the migration will take 6-12 months once the transfer begins.

The Sun systems are mostly ready to go. The ARSC custom software is installed and working. We have all the networks working; however, trunking is not yet supported on our copper gigabit ethernet cards. HIPPI is working, though we should note that Sun only supports it in the Solaris 8 environment.

SAM-QFS has had a number of problems. We had some initial configuration problems that we've fixed, and there is one outstanding problem we're still testing. We tried the System Error Facility (SEF), new in version 4.0. We later learned nobody had tried SEF before, and we found it caused some write errors on tapes, so we turned off that feature. We are currently trying to solve or work around an obscure positioning error that can result in a tape file being overwritten. It seems to be caused by a combination of errors in SAM and the st driver. We are testing a workaround now.

The migration toolkit is ready for testing.

The STK tape drives are working as advertised.

To test capacity, we wrote 128MB files to fill up a T9840 and a T9940B. These files contained random data. Compression on the tape drives was turned on and 256K tape blocks were used. The T9840 capacity was 27.0GB and the T9940B held 280.5GB.

Figure 5 shows the write performance of T9840 and T9940B. The T9840 tops out at about 24MB/s, while the T9940B approaches 35MB/s for write. The T9940B performance dropped off somewhat beyond a 1 GB file. This is in part due to the tape changing directions for recording. It was also affected by another process on the system working at intervals on the same RAID array. This test used random data and excludes mount and positioning time.

Figures 6 & 7 show read performance on large files, again assuming mounted and positioned tape. Figure 6 shows the time to read files. A 1 GB file stage took about 20 seconds on T9940B, almost 40 seconds on T9840. Depending on where the file is located on tape, somewhere in the 1 to 2 GB file size is where T9940 starts to outperform T9840, including mount and positioning. Figure 7 shows read performance on T9840 is between 20 and 25MB/s, while T9940B is between 42 and 44MB/s.

Figures 8 & 9 show time to stage a 128MB file from the two media types including mount and tape positioning. Depending on tape position, stages from T9840 take between 20 and 60 seconds. Stages from T9940B take between 25 and 115 seconds.
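The crossover between the two drive types can be estimated with a simple linear model: total stage time is a fixed mount-and-position overhead plus file size divided by streaming read rate. The sketch below is illustrative only. The read rates are midpoints of the ranges reported above; the overheads of 30 s and 60 s are assumed values picked from inside the reported 128MB stage-time ranges, not measurements.

```python
def stage_time_s(size_mb, overhead_s, rate_mb_s):
    """Linear model: mount/position overhead plus streaming transfer time."""
    return overhead_s + size_mb / rate_mb_s


def crossover_size_mb(overhead_a, rate_a, overhead_b, rate_b):
    """File size at which drive B (slower to start, faster to stream)
    takes the same total time as drive A."""
    if rate_b <= rate_a:
        raise ValueError("drive B must stream faster than drive A")
    return (overhead_b - overhead_a) / (1.0 / rate_a - 1.0 / rate_b)


# ASSUMED inputs: overheads are illustrative, rates are range midpoints.
T9840 = {"overhead_s": 30.0, "rate_mb_s": 22.5}
T9940B = {"overhead_s": 60.0, "rate_mb_s": 43.0}

size = crossover_size_mb(
    T9840["overhead_s"], T9840["rate_mb_s"],
    T9940B["overhead_s"], T9940B["rate_mb_s"],
)
print(f"crossover near {size:.0f} MB")
```

With these inputs the crossover lands at roughly 1.4 GB, inside the 1 to 2 GB range quoted earlier. Because the overheads vary widely with tape position, the real crossover for any given file will move accordingly.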

We've only performed limited testing on the Sun T3+ disk so far. Figure 10 shows write performance on a single controller using RAID 5 in a 7+1+1 configuration. The 512K file used a 512K block; all others used a 1MB block. Write bandwidth ranged up to just over 80MB/s. Reads were faster, topping out at just over 90MB/s.

Nathan Bills, one of our network analysts, has done some testing of the networks using iperf. On the gigabit ethernet, he has seen up to 940Mbit/s using 12 input streams from 3 separate SGI systems. One input stream from our Onyx 3200 transferred at 579Mbit/s. 18 input streams from the Onyx 3200 topped out at 706Mbit/s. The gigabit ethernet is not using jumbo frames, as Sun doesn't officially support that at this time.

On the HIPPI, tests between the Cray SV1 and one of the 6800s showed one stream going at 268Mbit/s and two streams at 521Mbit/s, with additional streams leveling off at 575Mbit/s.

Here are some comparisons between the SAM-QFS and the DMF products and their environments.